Artificial Intelligence Project: Detecting Phishing Websites

Student research project comparing classification models on a dataset of website characteristics.

Final report: [PDF]

GitHub Repo: [Link]

Why did I do this?

The coursework for the Introduction to AI module at university required us to apply different AI techniques to a "machine learning problem" of our choice in a group project and to compare and contrast ways to evaluate our chosen problem data. I wanted to choose a problem related to either web development or cybersecurity. This was an elective module, and I chose it because I wanted to learn more about artificial intelligence.

It should be noted, I began this module in late 2022, shortly before the release of ChatGPT which brought generative AI to mainstream attention. Previously, I was aware of GitHub CoPilot and had seen other AI-related projects from peers.

What resources/knowledge did I have?

In the lectures, we were taught the theoretical background of AI, classification, and regression models. During the tutorials, we gained hands-on experience with Jupyter Notebook and Spyder. We had access to public datasets from Kaggle and Google Dataset Search. Although I was comfortable with Python, the primary libraries we used were scikit-learn, Matplotlib, NumPy, and Pandas which I had not used before.

Method

This was a group research project that would produce a final report. We began by creating a GitHub repository and a shared Google Drive. The first step was to find a "machine learning problem" to apply artificial intelligence techniques. I found a dataset on Kaggle containing 11,055 data points, each listing 30 characteristics of a website (mainly of its URL) and labelling the website as either phishing or non-phishing.

We chose a classification problem based on this dataset. We trained various models on a fraction of the data points, tasking each model with predicting the labels of the remaining data points based on their characteristics.

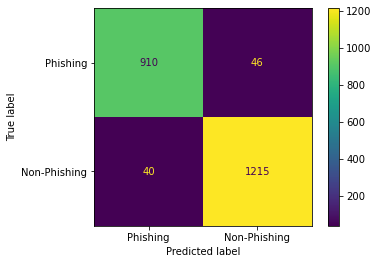

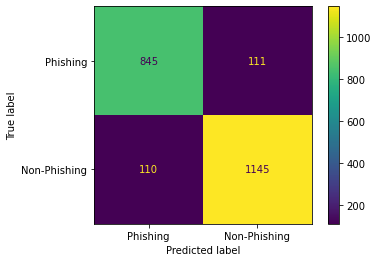

Using the sklearn.metrics module, we evaluated their effectiveness by generating confusion matrices and measuring accuracy. Additionally, we performed fine-tuning techniques to observe their effects on accuracy. Our project evaluated eight models, with each member responsible for two. I evaluated the Decision Tree and Naive Bayes models.

Outcome

Our dataset required minimal preprocessing, though its non-continuous nature (mostly consisting of -1s, 0s and 1s) impacted predictions for certain models. Surprisingly, the linear regression model achieved our success criteria of 90% accuracy, reaching 92% without major preprocessing.

We focused our experimentation on dropping columns before splitting the dataset into training and validation sets. Dropping the columns "having_Sub_Domain" and "double_slash_redirecting" improved accuracy, particularly for the support vector machine (87% to 92%) and Naive Bayes models (59% to 68%).

Overall, our models achieved varying degrees of accuracy, with most meeting our target of 90%. The perceptron and support vector machine models, which initially performed poorly, showed improved results after slight modification to the dataset. The random forest and decision tree models performed the best, achieving 97% and 96% accuracy, respectively, on the unedited dataset (with only the id column dropped).

Below are some of the confusion matrices that were produced as part of my work. Each one has predicted 2211 data points.